How do I explain this? Perhaps the summary is best left at: "When I was in college I always wanted a cool robot dog to smoke weed with." Through my hard work, I feel I have discovered something very important - actual dogs were the best all along.

This algorithm is sort of a "behavioral cloning" algorithm. For example, when someone drove it towards soda cans, it "learned" the behavior of driving towards soda cans.

Every revision has used an MMP5, a robot platform from themachinelab.com. I eventually managed to get a lot of it inside that chassis, based around a NanoPC-T4. A Kinect is glued on top, and an SMA antenna jack is mounted behind it where the antenna pokes up like a tail so that it looks cool.

The earlier hardware was based around a case-mod. Directly on top of the robot platform was a desktop case containing much of the electronics. It had a power supply meant for a car dashboard PC and a separate power supply for the robot platform, both running off a large 24 volt LiFePO4 battery. The motherboard was a low-power mini-ITX Intel Atom board. There were two USB peripherals: a first-generation Microsoft Kinect and an Arduino handling motor control.

One thing I planned to study at length is human/robot interaction. The first hardware revision, a robot I called alice, was based around a netbook, and people's first impression of it was very favorable. I felt I needed the extra computational power of a desktop I had at the time. I have since realized the computational power of the netbook would have been fine for this purpose, as the actual AI software runs over the LAN on my desktop. Now I am on the fourth revision, and it's just hardwired to my desktop.

In the third revision there was other hardware involved, four computers total. The bulk of the work is done by my desktop, a unix-type system. The computer that was actually on the robot was also a unix-like OS. The setup uses a lot of 9P protocol components which are distributed among the four computers.

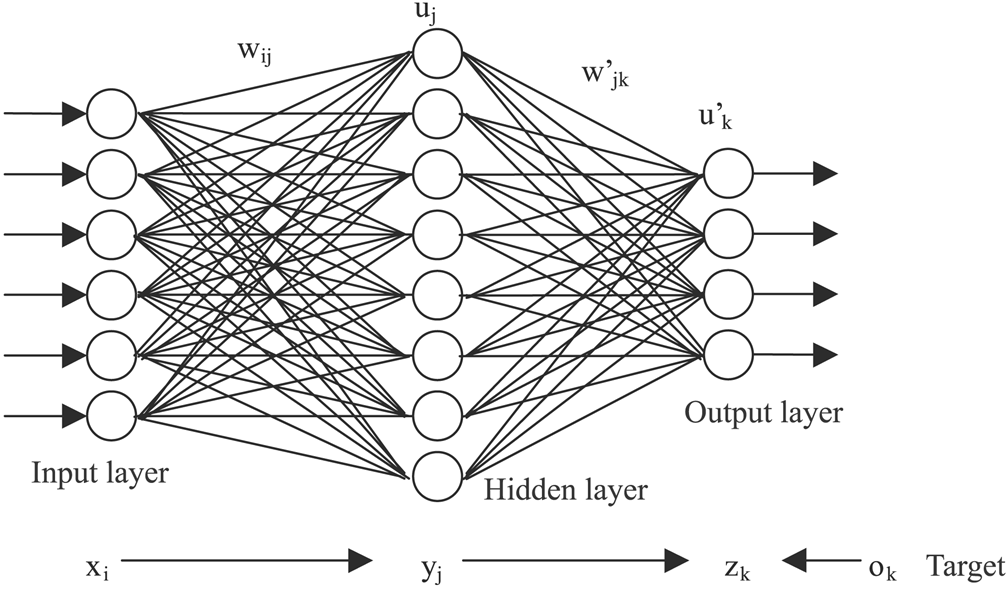

This is the best part. Deep learning algorithms are based on neuroscience. The simplest example, perhaps, is three-layer linear back-propagation, as shown below. This can be extended with many more layers, different types of nodes and interconnections, loopbacks, different types of inputs and outputs, etc. The inputs can be pictures, text, audio, whatever... the outputs are typically thresholded "bits" which can be high or low, though the raw values are more like analog than digital. If you want these algorithms to do something, they have to be "trained," which is simply showing them examples of different inputs and adjusting everything by looking at the error on the outputs from known examples. From there, mathematically it's the same as the calculus of an idealized ball rolling down a hill, but in an N-dimensional space where each adjustable weight is a "dimension" and the height of the hill is the error.
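The "ball rolling down a hill" picture can be made concrete with a very small example. This is only a sketch in plain Python, not libfann code and not the network used on the robot: a single linear unit with one weight and one bias, trained by gradient descent on squared error. The function name, learning rate, epoch count, and toy data are all assumptions chosen for illustration.

```python
def train_linear_unit(samples, rate=0.1, epochs=500):
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, target in samples:
            error = (w * x + b) - target   # how wrong the output is
            grad_w += 2.0 * error * x / n  # slope of the error "hill" along w
            grad_b += 2.0 * error / n      # slope of the error "hill" along b
        # Step downhill: move each parameter against its slope.
        w -= rate * grad_w
        b -= rate * grad_b
    return w, b


# Toy "known examples" that lie on the line y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
w, b = train_linear_unit(samples)
print(round(w, 3), round(b, 3))
```

Back-propagation does the same thing for every weight in every layer at once, using the chain rule to work out each weight's share of the output error.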

I had to modify libfann by adding leaky rectifying linear units, at this point a relatively new type of activation function found to work well for artificial neural networks, or ANNs. I thought about going through the process of adding GPU support, but I have not even begun to at this point. I'm finding it works pretty well without parallelization at all on my i5 desktop. If I run everything on the robot itself it's pretty slow, but this is possible if I wanted to take it away from network connectivity. I had bad luck with libfann's openmp support. The training algorithms are somehow different than a simple fann_train(). The network I started with has 64,000 units arranged in five layers. There are 60,000 input units, three hidden layers of 1,000 units each, and a 1,000-unit output layer.
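For readers who have not met them, here is a rough sketch of a leaky rectifying linear unit and of the layer arithmetic above. It is plain Python rather than the modified libfann code, and the negative-side slope of 0.01 is an assumption (a common default), not necessarily the value used in this project. The weight count also assumes fully connected layers and ignores bias units.

```python
def leaky_relu(x, slope=0.01):
    """Pass positive values through; scale negative values by a small slope."""
    return x if x > 0 else slope * x


def leaky_relu_derivative(x, slope=0.01):
    """Gradient used during back-propagation; never exactly zero."""
    return 1.0 if x > 0 else slope


# Layer sizes as described in the text: input, three hidden layers, output.
LAYERS = [60_000, 1_000, 1_000, 1_000, 1_000]

total_units = sum(LAYERS)
# Assuming each layer is fully connected to the next (bias units ignored).
total_weights = sum(a * b for a, b in zip(LAYERS, LAYERS[1:]))

print(total_units)    # 64000
print(total_weights)  # 63000000
```

Because the slope on the negative side is small but not zero, a unit that goes negative still passes some gradient back, which is the usual argument for the "leaky" variant.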

This is my third attempt at a libfann-based driver AI for the hEather robot. All three are online at https://github.com/echoline/fanny. For this attempt I thought a lot about design before getting started. This is a recurrent ANN, meaning I put some output back in as input. I thought about the nature of a mind in the creation of this ANN. One thing the mind constantly does is predict not only the immediate future, but the present moment itself. Thus, 36,000 inputs are the last ten inputs from the camera. I do not use the camera input directly; instead I "convolve" it, with greater detail towards the center. The current frame is not used as input either: the network predicts it, and I measure the error of that prediction. There are six output "bins." 600 output neurons are devoted to minimizing the error of the present moment based on the last ten frames of input. The AI minimizes on the output bin it most closely matches. This error I call "surprise," as it is the error of the robot's prediction of the current moment. As the surprise drops, another variable, which I called "fidget," rises. When the robot is less surprised it fidgets and moves around trying to learn its surroundings.
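The "surprise" and "fidget" idea can be sketched roughly as follows. This is not the code from the fanny repository. It assumes that each output bin holds one candidate prediction, that the error is mean squared error, and that fidget drifts towards `1 - surprise`; the text above does not specify any of these details, so the function names, the `gain` parameter, and the update rule are all hypothetical.

```python
def mean_squared_error(predicted, actual):
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


def surprise_and_best_bin(bin_predictions, actual):
    """Return (surprise, index) for the bin that best matches the current frame."""
    errors = [mean_squared_error(p, actual) for p in bin_predictions]
    best = min(range(len(errors)), key=errors.__getitem__)
    return errors[best], best


def update_fidget(fidget, surprise, gain=0.1):
    """Hypothetical rule: fidget rises as surprise falls, clamped to [0, 1]."""
    target = max(0.0, min(1.0, 1.0 - surprise))
    return fidget + gain * (target - fidget)


# Tiny stand-in data: three bins of two values each instead of six larger bins.
bins = [[0.0, 0.0], [1.0, 1.0], [0.5, 0.5]]
current_frame = [1.0, 0.9]

surprise, best_bin = surprise_and_best_bin(bins, current_frame)
fidget = update_fidget(0.0, surprise)
print(best_bin, surprise, fidget)
```

In a training loop, only the best-matching bin would be trained towards the current frame, which is one reading of "minimizes on the output bin it most closely matches."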

100 output units are dedicated as "motor neurons." In my design plan, these actually train on the standard deviation and average across all 100 units, but I made a mistake in the math for this. I have no idea why it works so well, and only with libfann. The motor outputs are arranged into 5 bins, 20 units apiece. The robot has 5 behaviors: tilt up, tilt down, left, right, and forward. If any output bin is greater than all the others combined, the robot performs the given action. It can be driven by setting the bins manually, and learns to mimic those behaviors.
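The decision rule for the motor bins can be sketched like this. It is an illustration in plain Python, not the project's code. It assumes that a bin's value is the sum of its 20 units, that the bins appear in the order the behaviors are listed above, and that the outputs are non-negative; none of these details is confirmed by the text.

```python
# Assumed order of the bins; the text lists the behaviors but not their layout.
ACTIONS = ["tilt up", "tilt down", "left", "right", "forward"]
BIN_SIZE = 20


def choose_action(motor_outputs):
    """Return the action whose bin outweighs all other bins combined, else None."""
    if len(motor_outputs) != len(ACTIONS) * BIN_SIZE:
        raise ValueError("expected %d motor outputs" % (len(ACTIONS) * BIN_SIZE))
    totals = [
        sum(motor_outputs[i * BIN_SIZE:(i + 1) * BIN_SIZE])
        for i in range(len(ACTIONS))
    ]
    grand_total = sum(totals)
    for action, total in zip(ACTIONS, totals):
        if total > grand_total - total:
            return action
    return None  # no bin dominates, so the robot does nothing


# Example: the "left" bin is strongly active, the rest are nearly quiet.
outputs = [0.1] * 40 + [1.0] * 20 + [0.1] * 40
print(choose_action(outputs))
```

Driving the robot by hand then amounts to writing a dominant value into one bin and using that as the training target for these 100 units.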

Also worth mentioning are a couple of other things I tried in this program. Some output neurons during training are "held" at the value they just output. So while everything else minimizes on some other kind of error, these output neurons minimize to their last value, holding somewhat constant at whatever error from 0 they have accumulated. I input a few other variables, including one hidden layer in its entirety, the input from the Kinect's accelerometer, and all of the last 10 outputs.

This is the part that gathers the input from a Kinect. It's a 9P fileserver which wraps libfreenect into a filesystem API for any system that supports the Plan 9 protocol. Source is available here. This is a pretty standard approach to 9P/Plan9.

After compilation, which requires libjpeg, libixp, and libfreenect, kinectfs takes a single argument, an address in the form tcp!ip!port or unix!/path to listen on. After it's running, you can mount it with anything that can mount 9P. I like to use ixpc, included with libixp, or 9mount.

Kinectfs is a 9P fileserver, which is a virtual filesystem. In this case, mounted at /n/kinect, it shows a directory listing:

    cpu% ls /n/kinect
    /n/kinect/bw.jpg
    /n/kinect/bw.pnm
    /n/kinect/color.jpg
    /n/kinect/color.pnm
    /n/kinect/depth.jpg
    /n/kinect/depth.pnm
    /n/kinect/edge.jpg
    /n/kinect/edge.pnm
    /n/kinect/extra.jpg
    /n/kinect/extra.pnm
    /n/kinect/led
    /n/kinect/tilt

One can mount or interact with 9P fileservers from other OSes as well. On opening any of the .pnm or .jpg files, the software uses the Kinect to take a picture, and reading the opened file gets the image data of the snapshot. Reading from and writing to the files tilt and led gets and sets status information for the tilt servo and LED on the Kinect. Writing valid integers to color.pnm and depth.pnm sets the image formats for the color camera and depth camera.
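Because everything is exposed as files, a client needs nothing beyond ordinary file I/O. The sketch below assumes kinectfs is already mounted at /n/kinect, as in the listing above. The helper names are made up for illustration, and the exact text format that the tilt file accepts (here a decimal number followed by a newline) is an assumption, not something documented in this write-up.

```python
from pathlib import Path


def take_snapshot(mount="/n/kinect", name="color.jpg"):
    """Opening the file triggers a capture; reading it returns the image bytes."""
    return (Path(mount) / name).read_bytes()


def get_tilt(mount="/n/kinect"):
    """Read the tilt status as text."""
    return (Path(mount) / "tilt").read_text().strip()


def set_tilt(value, mount="/n/kinect"):
    """Write a new tilt value; the accepted format is assumed, not documented."""
    (Path(mount) / "tilt").write_text("%s\n" % value)


if __name__ == "__main__" and Path("/n/kinect").is_dir():
    # Only runs when the fileserver is actually mounted.
    Path("snapshot.jpg").write_bytes(take_snapshot())
    print("tilt:", get_tilt())
```

The same pattern works for the other image files and for led; only the file name changes.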

It works surprisingly well, especially considering that I didn't finish the motor output code correctly before it started working fine. It's exciting and a bit frightening to think that at this point in history there are probably computers powerful enough to simulate a human in real-time. It's probably not the best approach. An AI ends up having "psychology" of its own which can be planned out in the design. Both the technology and understanding of neuroscience are making rapid progress. Within a few short years, some major philosophical questions will be answered.

A thermostat is a simple machine that adjusts itself to keep a stable temperature. With so many complicated machines now that learn from input, I feel we must consider impact before benefit.

No one will know if robots are really thinking with strong AI until there is one with enough computational power and good enough programming that we can just discuss these philosophical issues with it. It should be handled carefully. A human being on average has about 86,000,000,000 brain cells, each one connected to thousands of others. A short time after we are able to simulate that many, we will be able to simulate a thousand times as many. This clearly shows we have a responsibility to do it right and teach the right things to such an advanced algorithm.

The general public continues to doubt it is possible at all after so much history of robots in fiction. People continue having the misconception that computers can't write poetry, and continue leaving the right kinds of poetry out of their input data.